From time to time, customers ask us whether MailWizz is suited for sending large volumes of email.

Below, we ran some tests to see how MailWizz performs when dealing with large amounts of data.

Servers

We ran our tests on DigitalOcean, using one droplet and one managed database instance.

For the droplet, we chose Debian 12 with 96 GB RAM, 48 vCPUs and a 100 GB disk, while for the database we chose MySQL 8 with 128 GB RAM, 16 vCPUs and a 3510 GB disk.

DigitalOcean's prices for these servers are fairly high, and you can find larger servers for less money elsewhere. For instance, Hetzner offers an AMD EPYC™ 7502P with 32 cores / 64 threads @ 2.5 GHz and 1024 GB RAM for about $620/mo.

We consider the servers selected for this test to be at the lower end of medium-sized servers.

Setup

On the web server, we used Docker to install NGINX and PHP-FPM (PHP 8.1), nothing too fancy.

For the database server, since we're using a managed solution, we did no additional setup; it runs as a single node.

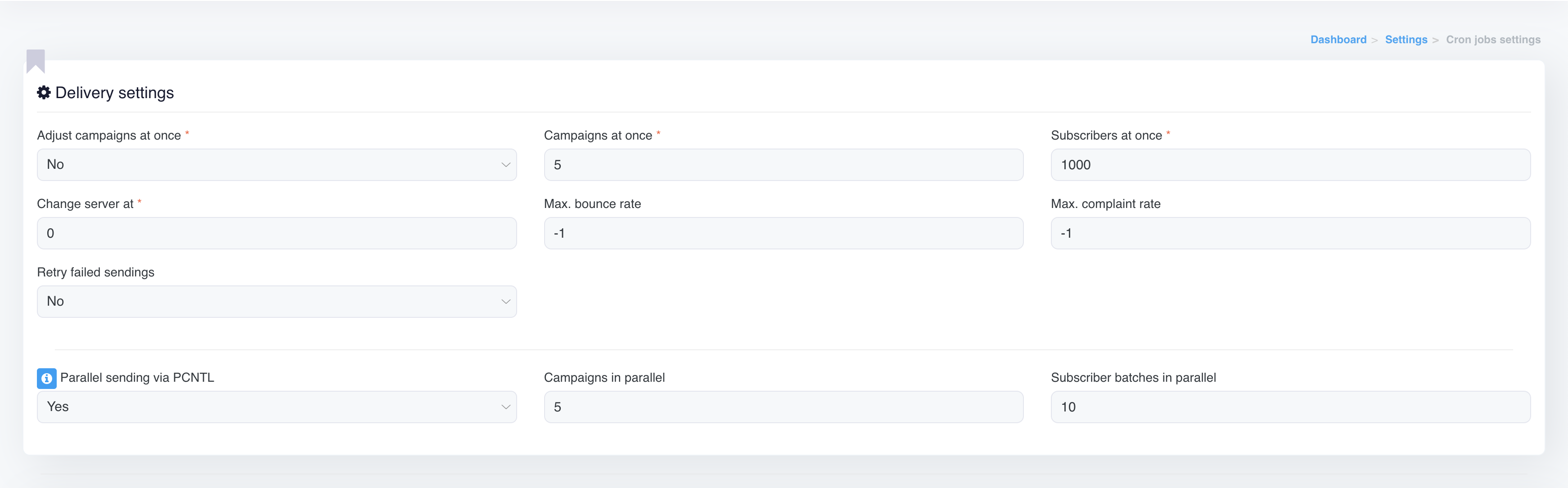

For MailWizz, we changed the default delivery settings to:

Given the size of the server we selected, we think these are reasonable settings; there was no need to push them further to obtain good results.

Additionally, we're using the Dummy Mailer inside MailWizz, which drops the emails. This way the results are not influenced by network latency, and we're testing MailWizz itself, not the network or the SMTP server.

We're using Queue Tables, as any high-volume sender should. Queue Tables make sense only for high-volume senders; otherwise, they add too much overhead and should not be used.

Data sets

Using MailWizz's stress-test-create-list command, available since MailWizz v2.3.2, we created 80 lists of various sizes, from 1k to 1M subscribers, for a total of a bit over 20M subscribers:

`mysql> select count(*) from mw_list_subscriber;`

| count(*) |
|---|
| 20019723 |

1 row in set (0.30 sec)

Each list has between 1 and 4 custom fields, which translates into a bit under 47M list field values:

`mysql> select count(*) from mw_list_field_value;`

| count(*) |
|---|
| 46885099 |

1 row in set (0.60 sec)

We also used these lists to create a large number of campaigns, 201 to be more precise, so that we had plenty of data before starting the real tests.

The campaigns we created sent over 25M emails to lists of all the sizes mentioned above:

`mysql> select count(*) from mw_delivery_server_usage_log;`

| count(*) |
|---|
| 25683629 |

1 row in set (0.37 sec)

Why not more, you wonder?

First, we think 25M is a decent number for our test; we had to stop somewhere.

Secondly, MailWizz has routines in place to remove records from many tables that tend to become large.

It does so for campaigns that have finished sending; you just need to enable this from Backend > Settings > Cron.

There are KB articles related to this, so we won't go over these aspects here.

Running the tests

Let's start with 5 campaigns, each one sending to 10k subscribers:

`` mysql> select c.campaign_id, timestampdiff(second, c.started_at, c.finished_at) as send_duration_seconds, (select count(*) from mw_campaign_delivery_log where campaign_id=c.campaign_id) as subs_count, date_added, last_updated from mw_campaign c where `status`='sent' order by campaign_id desc limit 5; ``

| campaign_id | send_duration_seconds | subs_count | date_added | last_updated |
|---|---|---|---|---|
| 206 | 16 | 10000 | 2023-08-04 14:57:44 | 2023-08-04 14:58:17 |
| 205 | 17 | 10000 | 2023-08-04 14:57:44 | 2023-08-04 14:58:34 |
| 204 | 17 | 10000 | 2023-08-04 14:57:44 | 2023-08-04 14:58:34 |
| 203 | 18 | 10000 | 2023-08-04 14:57:44 | 2023-08-04 14:58:52 |
| 202 | 18 | 10000 | 2023-08-04 14:57:44 | 2023-08-04 14:58:52 |

5 rows in set (0.00 sec)

This was pretty fast: it took under 20 seconds to process 50k subscribers.

Let's try 5 campaigns of different sizes: 10k, 12.5k, 15k, 25k and 70k.

`` mysql> select c.campaign_id, timestampdiff(second, c.started_at, c.finished_at) as send_duration_seconds, (select count(*) from mw_campaign_delivery_log where campaign_id=c.campaign_id) as subs_count, date_added, last_updated from mw_campaign c where `status`='sent' order by campaign_id desc limit 5; ``

| campaign_id | send_duration_seconds | subs_count | date_added | last_updated |
|---|---|---|---|---|
| 211 | 117 | 70000 | 2023-08-04 15:00:01 | 2023-08-04 15:02:41 |
| 210 | 57 | 25000 | 2023-08-04 15:00:01 | 2023-08-04 15:01:41 |
| 209 | 41 | 15000 | 2023-08-04 15:00:01 | 2023-08-04 15:01:25 |
| 208 | 41 | 12500 | 2023-08-04 15:00:01 | 2023-08-04 15:01:25 |
| 207 | 17 | 10000 | 2023-08-04 15:00:01 | 2023-08-04 15:00:44 |

5 rows in set (0.02 sec)

Even with different campaign sizes, we still get very good results. The longest processing time is, obviously, the one for the 70k campaign: under 2 minutes. So we've processed 132.5k subscribers in under 2 minutes. Not bad at all.

What about 5 campaigns, each with 100k subscribers?

`` mysql> select c.campaign_id, timestampdiff(second, c.started_at, c.finished_at) as send_duration_seconds, (select count(*) from mw_campaign_delivery_log where campaign_id=c.campaign_id) as subs_count, date_added, last_updated from mw_campaign c where `status`='sent' order by campaign_id desc limit 5; ``

| campaign_id | send_duration_seconds | subs_count | date_added | last_updated |
|---|---|---|---|---|
| 216 | 223 | 100000 | 2023-08-04 15:03:50 | 2023-08-04 15:08:27 |
| 215 | 223 | 100000 | 2023-08-04 15:03:50 | 2023-08-04 15:08:27 |
| 214 | 204 | 100000 | 2023-08-04 15:03:50 | 2023-08-04 15:08:08 |
| 213 | 223 | 100000 | 2023-08-04 15:03:50 | 2023-08-04 15:08:27 |
| 212 | 226 | 100000 | 2023-08-04 15:03:50 | 2023-08-04 15:08:08 |

5 rows in set (0.07 sec)

It took under 230 seconds, or under 4 minutes, to process 500k subscribers.

Generally speaking, campaigns sending to 100k subscribers are considered medium-to-large, given that most people don't have lists that large.

Let's push this further: 5 campaigns, each with 500k subscribers.

`` mysql> select c.campaign_id, timestampdiff(second, c.started_at, c.finished_at) as send_duration_seconds, (select count(*) from mw_campaign_delivery_log where campaign_id=c.campaign_id) as subs_count, date_added, last_updated from mw_campaign c where `status`='sent' order by campaign_id desc limit 5; ``

| campaign_id | send_duration_seconds | subs_count | date_added | last_updated |
|---|---|---|---|---|
| 221 | 1190 | 500000 | 2023-08-04 15:09:25 | 2023-08-04 15:31:43 |
| 220 | 1190 | 500000 | 2023-08-04 15:09:20 | 2023-08-04 15:31:43 |
| 219 | 1190 | 500000 | 2023-08-04 15:09:20 | 2023-08-04 15:31:43 |
| 218 | 1190 | 500000 | 2023-08-04 15:09:20 | 2023-08-04 15:31:43 |
| 217 | 1281 | 500000 | 2023-08-04 15:09:20 | 2023-08-04 15:31:24 |

5 rows in set (0.30 sec)

Processing large campaigns like the ones above, where each list has 500k subscribers, is still very fast with MailWizz.

The longest campaign took 1281 seconds, that is, under 22 minutes, to process its share of the 2.5M subscribers.

And that's not even the limit of what MailWizz can do, as we'll see next.

Let's push this even further: 5 campaigns, each with 1M subscribers.

`` mysql> select c.campaign_id, timestampdiff(second, c.started_at, c.finished_at) as send_duration_seconds, (select count(*) from mw_campaign_delivery_log where campaign_id=c.campaign_id) as subs_count, date_added, last_updated from mw_campaign c where `status`='sent' order by campaign_id desc limit 5; ``

| campaign_id | send_duration_seconds | subs_count | date_added | last_updated |
|---|---|---|---|---|
| 226 | 2557 | 1000000 | 2023-08-04 15:33:37 | 2023-08-04 16:22:24 |
| 225 | 2557 | 1000000 | 2023-08-04 15:33:37 | 2023-08-04 16:22:24 |
| 224 | 2557 | 1000000 | 2023-08-04 15:33:37 | 2023-08-04 16:22:24 |
| 223 | 2557 | 1000000 | 2023-08-04 15:33:37 | 2023-08-04 16:22:24 |
| 222 | 2879 | 1000000 | 2023-08-04 15:33:37 | 2023-08-04 16:22:04 |

5 rows in set (0.61 sec)

Processing 5M subscribers in parallel, for 5 different campaigns, took 2879 seconds, that is, under 50 minutes.

We consider this faster than you'd generally need.
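To compare the runs, throughput can be estimated by dividing the subscribers processed by the longest `send_duration_seconds` of each run. This short Python calculation assumes the campaigns within a run were processed concurrently, so the slowest campaign approximates the run's wall-clock time; it ignores any wait between `date_added` and the start of sending, so treat the results as rough figures. The names `RUNS` and `throughput` are ours, for illustration only.

```python
# (run label, total subscribers, longest send_duration_seconds) from the tables above
RUNS = [
    ("5 x 10k", 50_000, 18),
    ("mixed sizes", 132_500, 117),
    ("5 x 100k", 500_000, 226),
    ("5 x 500k", 2_500_000, 1281),
    ("5 x 1M", 5_000_000, 2879),
]


def throughput(subscribers: int, seconds: int) -> float:
    """Subscribers per second, assuming the slowest campaign equals the run's wall time."""
    return subscribers / seconds


for label, subscribers, seconds in RUNS:
    print(f"{label:>12}: {throughput(subscribers, seconds):7.0f} subscribers/s "
          f"({seconds / 60:.1f} min)")
```

This works out to roughly 1,100 to 2,800 subscribers per second across the runs. The mixed-size run scores lowest, most likely because its single 70k campaign keeps going after the smaller ones have finished.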

Info

As you can see from the stats above, processing regular campaigns is very fast when using Queue Tables, even when targeting a very high number of subscribers.

Processing 5M subscribers in under 50 minutes is really not something many self-hosted email marketing systems can do.

What about segmentation?

Segmenting by email address

MailWizz is at its fastest when segmenting on the email address, since that case is very well optimized.

To demonstrate this, in one of our 1M lists we segment the email field by domain, since we're interested only in hotmail.com addresses.

In this list, that means 166k subscribers out of 1M.

`` mysql> select c.campaign_id, timestampdiff(second, c.started_at, c.finished_at) as send_duration_seconds, (select count(*) from mw_campaign_delivery_log where campaign_id=c.campaign_id) as subs_count, date_added, last_updated from mw_campaign c where `status`='sent' order by campaign_id desc limit 1; ``

| campaign_id | send_duration_seconds | subs_count | date_added | last_updated |
|---|---|---|---|---|
| 227 | 527 | 166891 | 2023-08-04 16:24:54 | 2023-08-04 16:33:56 |

1 row in set (0.02 sec)

It took under 9 minutes. While this might not seem fast, remember that it is all about the number of subscribers MailWizz has to go through to find the right ones, in this case, all 1M subscribers.

We still consider this to be acceptable.
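The point about going through the whole list can be illustrated with a minimal, hypothetical Python sketch. It is not how MailWizz evaluates segments internally; `Subscriber`, `segment` and `email_domain_is` are made-up names. It simply shows that every subscriber has to be checked, so the work grows with the size of the list, while the number of matches only affects how many subscribers come out.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Subscriber:
    email: str


def segment(subscribers: Iterable[Subscriber],
            condition: Callable[[Subscriber], bool]) -> tuple[list[Subscriber], int]:
    """Return the matching subscribers and how many had to be examined."""
    matched: list[Subscriber] = []
    scanned = 0
    for sub in subscribers:
        scanned += 1  # every subscriber is checked, whether it matches or not
        if condition(sub):
            matched.append(sub)
    return matched, scanned


def email_domain_is(domain: str) -> Callable[[Subscriber], bool]:
    """Build a case-insensitive 'email ends with @domain' condition."""
    suffix = "@" + domain.lower()
    return lambda sub: sub.email.lower().endswith(suffix)


if __name__ == "__main__":
    subs = [Subscriber("a@hotmail.com"), Subscriber("b@gmail.com"), Subscriber("c@HOTMAIL.com")]
    matched, scanned = segment(subs, email_domain_is("hotmail.com"))
    print(len(matched), scanned)  # 2 3
```

Applied to the test above, 1M subscribers had to be examined to find 166,891 matches; if the whole list is examined within the 527 seconds, that is roughly 1,900 subscribers examined per second.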

Segmenting by random fields

In our 1M list, we filter for people aged 25 or older who have a hotmail.com email address and whose name is not John.

In this list, that means 150k subscribers out of 1M.

`` mysql> select c.campaign_id, timestampdiff(second, c.started_at, c.finished_at) as send_duration_seconds, (select count(*) from mw_campaign_delivery_log where campaign_id=c.campaign_id) as subs_count, date_added, last_updated from mw_campaign c where `status`='sent' order by campaign_id desc limit 1; ``

| campaign_id | send_duration_seconds | subs_count | date_added | last_updated |
|---|---|---|---|---|
| 228 | 645 | 150707 | 2023-08-04 16:35:52 | 2023-08-04 16:49:16 |

1 row in set (0.02 sec)

Under 11 minutes for a 150k-subscriber segment from a list of 1M, using a segment with 3 demanding conditions.

While we don't consider this fast, we do consider it acceptable.

Info

Please note that each condition you add to a segment makes it slower.

By default, MailWizz allows at most 3 conditions per segment to avoid potential problems.
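The segment used in the test above (age 25 or older, a hotmail.com address, name not John) can be pictured as three conditions combined with AND. The Python sketch below is purely illustrative and not MailWizz code: the `Subscriber` fields `email`, `first_name` and `age`, the `build_segment` helper and the `MAX_CONDITIONS` constant are hypothetical, with the constant set to 3 to mirror the default limit described above. It shows that each extra condition is one more check per subscriber.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Subscriber:
    email: str
    first_name: str  # hypothetical field standing in for the "name" custom field
    age: int


Condition = Callable[[Subscriber], bool]

MAX_CONDITIONS = 3  # hypothetical constant mirroring the default limit of 3


def build_segment(conditions: list[Condition]) -> Condition:
    """Combine conditions with AND, refusing more than MAX_CONDITIONS."""
    if len(conditions) > MAX_CONDITIONS:
        raise ValueError(f"at most {MAX_CONDITIONS} conditions per segment")
    conds = tuple(conditions)  # copy so later list changes don't alter the segment
    # all() stops at the first failing condition; in the worst case every
    # condition runs for every subscriber, so each one adds work.
    return lambda sub: all(cond(sub) for cond in conds)


# The three conditions from the test above
CONDITIONS: list[Condition] = [
    lambda s: s.age >= 25,
    lambda s: s.email.lower().endswith("@hotmail.com"),
    lambda s: s.first_name != "John",
]

if __name__ == "__main__":
    matches = build_segment(CONDITIONS)
    people = [
        Subscriber("ann@hotmail.com", "Ann", 30),
        Subscriber("john@hotmail.com", "John", 40),
        Subscriber("kim@hotmail.com", "Kim", 20),
    ]
    print([p.first_name for p in people if matches(p)])  # ['Ann']
```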

What about autoresponders?

Autoresponders put less pressure on the system than regular campaigns, so we think testing regular campaigns is sufficient.

One thing to keep in mind is that currently autoresponders cannot make use of the Queue Tables feature if they also use segments. This is a limitation we will fix in the near future.

Results from previous large campaigns

Remember, above we processed 5 campaigns, each sending to 1M subscribers, using Queue Tables.

To understand the importance of the Queue Tables feature when sending large volumes, compare those results with the ones below, where we did not use Queue Tables:

`` mysql> select c.campaign_id, timestampdiff(second, c.started_at, c.finished_at) as send_duration_seconds, (select count(*) from mw_campaign_delivery_log where campaign_id=c.campaign_id) as subs_count, date_added, last_updated from mw_campaign c where `status`='sent' order by campaign_id desc limit 5; ``

| campaign_id | send_duration_seconds | subs_count | date_added | last_updated |
|---|---|---|---|---|
| 196 | 13820 | 1000000 | 2023-08-04 11:00:29 | 2023-08-04 14:51:54 |
| 195 | 13820 | 1000000 | 2023-08-04 11:00:18 | 2023-08-04 14:51:54 |
| 194 | 13820 | 1000000 | 2023-08-04 11:00:01 | 2023-08-04 14:51:54 |
| 193 | 13820 | 1000000 | 2023-08-04 10:59:48 | 2023-08-04 14:51:54 |
| 192 | 13668 | 1000000 | 2023-08-04 10:59:26 | 2023-08-04 14:49:01 |

5 rows in set (0.62 sec)

Instead of taking under 50 minutes to process 5M subscribers, it took a bit under 4 hours.

The difference is huge and should clear any doubts about using Queue Tables when sending large volumes.
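The gap can be quantified with a quick Python calculation over the two 5 x 1M runs, using the slowest campaign of each run (campaign 222 with Queue Tables, campaigns 193 to 196 without) as an approximate wall-clock time. It assumes both runs were otherwise comparable; the variable names are ours, for illustration only.

```python
SUBSCRIBERS = 5_000_000         # 5 campaigns x 1M subscribers in each run
WITH_QUEUE_TABLES_S = 2879      # slowest campaign of the Queue Tables run
WITHOUT_QUEUE_TABLES_S = 13820  # slowest campaigns of the run without Queue Tables

speedup = WITHOUT_QUEUE_TABLES_S / WITH_QUEUE_TABLES_S

print(f"with Queue Tables:    {SUBSCRIBERS / WITH_QUEUE_TABLES_S:.0f} subscribers/s")
print(f"without Queue Tables: {SUBSCRIBERS / WITHOUT_QUEUE_TABLES_S:.0f} subscribers/s")
print(f"speedup: {speedup:.1f}x")
```

That is roughly 1,737 versus 362 subscribers per second, or about 4.8 times faster with Queue Tables on this setup.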

Conclusion

Sending high volumes of email is always a challenge. Depending on your data size and infrastructure, MailWizz can perform to expectations.

If you are a large-volume sender, we can't stress enough the importance of choosing the right hardware setup and enabling all the cleanup features MailWizz offers. Where possible, use Redis and Queue Tables.

Also consider running multiple server nodes, where each node combines one or more web servers with one or more database servers.


